Antivirus Proactive Protection Test (January 2008)

Table of Contents:

- Introduction

- Evaluating the effectiveness of proactive antivirus protection

- Results and Awards

- Changes to the effectiveness of proactive antivirus protection over time

- Signatures or Heuristics?

- Methodology used in testing of proactive antivirus protection »

Introduction

The industry has recently witnessed a shift in emphasis to so-called proactive methods of antivirus protection, which allow antivirus software to combat malicious programs that have undergone modifications and those that are as yet unknown. This development trend is the most promising on the market and almost every developer likes to emphasize just how good their proactive defense is.

There are even attempts to contrast the newer proactive technologies with the older reactive technologies that use signature-based methods to detect malware and that require continuous and rapid updates of antivirus databases.

The concept of proactive protection is, of course, extremely attractive: a virus hasn’t even appeared and already there is protection against it. But the question arises as to just how effective these technologies are.

It should be noted that proactive technologies encompass a broad range of concepts and approaches, and including them all within the framework of a single test is simply not feasible. In this test we will only compare the heuristic components of antivirus protection (heuristic + generic detection, i.e., extended signatures), without taking into account an analysis of system events (behavior blockers or HIPS).

The results of the test show how effective heuristic analyzers are and in which antivirus product this component performs best.

Evaluating the effectiveness of proactive antivirus protection

Fifteen of the most popular antivirus programs participated in the test to measure the effectiveness of proactive antivirus protection:

- Agnitum Outpost Security Suite 2008

- Avast! Professional Edition 4.7

- AVG Anti-Virus Professional Edition 7.5

- Avira AntiVir Personal Edition Premium 7.0

- BitDefender Antivirus 2008

- Dr.Web 4.44

- ESET NOD32 Anti-Virus 3.0

- F-Secure Anti-Virus 2008

- Kaspersky Anti-Virus 7.0

- McAfee VirusScan Plus 2008

- Panda Antivirus 2008

- Sophos Anti-Virus 7.0

- Symantec Anti-Virus 2008

- Trend Micro Antivirus plus Antispyware 2008

- VBA32 Antivirus 3.12

Testing was performed on Windows XP SP2 from 21 October to 8 December 2007, strictly in line with the methodology for checking the effectiveness of heuristic analyzers: the update function of every antivirus product was switched off, so the antivirus databases were frozen on the date the test began.

Diagram 1 and table 1 show the detection results for unknown malware by the different antivirus programs.

Diagram 1: Effectiveness of heuristic analyzers

Table 1: Effectiveness of heuristic analyzers

| Antivirus product | Number of undetected viruses | Detected viruses (%) |

| Avira | 1210 | 71% |

| BitDefender | 1560 | 63% |

| Eset | 1739 | 59% |

| DrWeb | 1793 | 57% |

| Sophos | 1855 | 56% |

| Avast | 2029 | 52% |

| VBA | 2175 | 48% |

| Kaspersky | 2289 | 45% |

| McAfee | 2381 | 43% |

| Symantec | 2583 | 38% |

| AVG | 2637 | 37% |

| F-Secure | 2685 | 36% |

| Trend Micro | 2927 | 30% |

| Panda Security | 3165 | 24% |

| Agnitum (VirusBuster) | 3679 | 12% |

| Total samples in collection: | 4191 |
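The percentages in table 1 follow directly from the undetected counts and the collection size: detected (%) = (4191 - undetected) / 4191 × 100. The minimal Python sketch below recomputes them. The dictionary keys mirror the table's product labels, and the function name `detection_rate` is illustrative, not part of the test methodology.

```python
# Undetected-sample counts from table 1; the collection holds 4191 samples.
TOTAL_SAMPLES = 4191

UNDETECTED = {
    "Avira": 1210,
    "BitDefender": 1560,
    "Eset": 1739,
    "DrWeb": 1793,
    "Sophos": 1855,
    "Avast": 2029,
    "VBA": 2175,
    "Kaspersky": 2289,
    "McAfee": 2381,
    "Symantec": 2583,
    "AVG": 2637,
    "F-Secure": 2685,
    "Trend Micro": 2927,
    "Panda Security": 3165,
    "Agnitum (VirusBuster)": 3679,
}


def detection_rate(undetected: int, total: int = TOTAL_SAMPLES) -> float:
    """Share of the collection that was detected, in percent."""
    return 100.0 * (total - undetected) / total


if __name__ == "__main__":
    for product, missed in UNDETECTED.items():
        print(f"{product:<22} {detection_rate(missed):5.1f}%")
```

Rounded to whole percent, the results reproduce the right-hand column of table 1. For example, BitDefender comes out at 62.8%, which rounds to 63%.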

The out-and-out leader in terms of the effectiveness of the heuristic protection component was Avira AntiVir Personal Edition Premium, whose detection level for unknown malicious programs was very high (71%).

The BitDefender Antivirus heuristic analyzer was also very effective, detecting 63% of the unknown malware. These results earned both products the Gold Proactive Protection Award.

The heuristic components in ESET NOD32 Anti-Virus, Dr.Web, Sophos Anti-Virus, Avast! Professional Edition, VBA32 Antivirus, Kaspersky Anti-Virus and McAfee VirusScan Plus all demonstrated a high level of effectiveness, ranging from 59% down to 43%. All of these products received the Silver Proactive Protection Award. The first two programs in this group, ESET NOD32 Anti-Virus (59%) and Dr.Web (57%), missed the Gold Proactive Protection Award by only 1-3%.

Another 5 products – Symantec Anti-Virus, AVG Anti-Virus Professional Edition, F-Secure Anti-Virus, Trend Micro Antivirus plus Antispyware, and Panda Antivirus – all achieved satisfactory results and received the Bronze Proactive Protection Award.

The only product to fail the test was Agnitum Outpost Security Suite, which detected only 12% of the unknown malware and appears to lack a heuristic analyzer.
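The margin by which a product missed Gold is a simple subtraction. However, the test does not state the Gold threshold. The sketch below assumes 60%, inferred only from the remark that ESET (59%) and Dr.Web (57%) missed Gold by 1-3%. Both `GOLD_THRESHOLD` and `points_short_of_gold` are hypothetical names used for illustration.

```python
# Heuristic detection rates (%) from table 1 for the two Gold winners and the
# two Silver products described as narrowly missing Gold.
RATES = {
    "Avira AntiVir Personal Edition Premium": 71,
    "BitDefender Antivirus": 63,
    "ESET NOD32 Anti-Virus": 59,
    "Dr.Web": 57,
}

# ASSUMPTION: the Gold threshold is not stated in the test; 60% is inferred
# from the remark that ESET (59%) and Dr.Web (57%) missed Gold by 1-3%.
GOLD_THRESHOLD = 60


def points_short_of_gold(rate: int, threshold: int = GOLD_THRESHOLD) -> int:
    """Percentage points missing to reach the assumed Gold threshold (0 if reached)."""
    return max(0, threshold - rate)


if __name__ == "__main__":
    for product, rate in RATES.items():
        print(f"{product}: {points_short_of_gold(rate)} point(s) short of Gold")
```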

Results and Awards

| Award | Products |

| Gold Proactive Protection Award | Avira AntiVir Personal Edition Premium 7.0 (71%), BitDefender Antivirus 2008 (63%) |

| Silver Proactive Protection Award | ESET NOD32 Anti-Virus 3.0 (59%), Dr.Web 4.44 (57%), Sophos Anti-Virus 7.0 (56%), Avast! Professional Edition 4.7 (52%), VBA32 Antivirus 3.12 (48%), Kaspersky Anti-Virus 7.0 (45%), McAfee VirusScan Plus 2008 (43%) |

| Bronze Proactive Protection Award | Symantec Anti-Virus 2008 (38%), AVG Anti-Virus Professional Edition 7.5 (37%), F-Secure Anti-Virus 2008 (36%), Trend Micro Antivirus plus Antispyware 2008 (30%), Panda Antivirus 2008 (24%) |

| Failed | Agnitum Outpost Security Suite 2008 (12%) |

Changes to the effectiveness of proactive antivirus protection over time

Because the new malicious programs were collected over a four-week period (5 November to 2 December 2007), it was also possible to evaluate how the effectiveness of the various heuristic analyzers changed over time. For this purpose, the sample collection was split into four equal parts, one for each week.

Diagram 2 and table 2 show data on the effectiveness of the heuristic analyzers for the weekly collections.

Diagram 2: Effectiveness of heuristic analyzers (broken down into weeks)

Table 2: Effectiveness of heuristic analyzers (broken down into weeks)

| Antivirus product | Detected, week 1 (%) | Detected, week 2 (%) | Detected, week 3 (%) | Detected, week 4 (%) |

| Avira | 72% | 72% | 65% | 74% |

| BitDefender | 66% | 63% | 56% | 65% |

| Eset | 72% | 58% | 47% | 56% |

| DrWeb | 58% | 53% | 53% | 63% |

| Sophos | 64% | 60% | 44% | 55% |

| Avast | 54% | 51% | 40% | 59% |

| VBA | 57% | 45% | 48% | 43% |

| Kaspersky | 49% | 40% | 42% | 49% |

| McAfee | 45% | 42% | 33% | 50% |

| Symantec | 42% | 36% | 30% | 44% |

| AVG | 47% | 32% | 35% | 34% |

| F-Secure | 38% | 29% | 32% | 43% |

| Trend Micro | 41% | 23% | 21% | 33% |

| Panda Security | 39% | 23% | 20% | 16% |

| Agnitum (VirusBuster) | 17% | 9% | 11% | 12% |

| Total samples in collection (all four weeks): | 4191 | | | |

Table 2 shows that the heuristic components in Avira AntiVir Personal Edition Premium, BitDefender Antivirus, Dr.Web and Kaspersky Anti-Virus remained the most stable throughout the period without updates. In contrast, the effectiveness of the component fell significantly in ESET NOD32 Anti-Virus (from 72% in week 1 to 56% in week 4), Panda Antivirus (from 39% to 16%) and AVG Anti-Virus Professional Edition (from 47% to 34%). The drop for ESET NOD32 Anti-Virus deserves particular mention: its detection rate fell from 72% in week 1 to 47% in week 3.
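One simple way to quantify "stable" versus "fell significantly" is to look at two measures: the spread (highest minus lowest weekly rate) and the change from week 1 to week 4. The sketch below computes both from table 2, along with the weekly mean. These measures are an illustrative choice, not the criteria stated in the test, and the function names are hypothetical.

```python
# Weekly detection rates (%) from table 2 (weeks 1-4, databases frozen).
WEEKLY = {
    "Avira": (72, 72, 65, 74),
    "BitDefender": (66, 63, 56, 65),
    "Eset": (72, 58, 47, 56),
    "DrWeb": (58, 53, 53, 63),
    "Sophos": (64, 60, 44, 55),
    "Avast": (54, 51, 40, 59),
    "VBA": (57, 45, 48, 43),
    "Kaspersky": (49, 40, 42, 49),
    "McAfee": (45, 42, 33, 50),
    "Symantec": (42, 36, 30, 44),
    "AVG": (47, 32, 35, 34),
    "F-Secure": (38, 29, 32, 43),
    "Trend Micro": (41, 23, 21, 33),
    "Panda Security": (39, 23, 20, 16),
    "Agnitum (VirusBuster)": (17, 9, 11, 12),
}


def spread(rates):
    """Highest minus lowest weekly rate: an illustrative stability measure."""
    return max(rates) - min(rates)


def change(rates):
    """Change from week 1 to week 4, in percentage points."""
    return rates[-1] - rates[0]


def mean(rates):
    """Average weekly rate; close to the table 1 figure if the weekly parts are of similar size."""
    return sum(rates) / len(rates)


if __name__ == "__main__":
    for product, rates in sorted(WEEKLY.items(), key=lambda item: spread(item[1])):
        print(f"{product:<22} spread {spread(rates):2d}  "
              f"week1->week4 {change(rates):+d}  mean {mean(rates):.1f}")
```

Sorted by spread, Avira and Kaspersky (9 points) and BitDefender and Dr.Web (10 points) are among the steadiest, while ESET shows the largest spread (25 points). Agnitum's spread is also small (8 points), but at a uniformly low level, so spread is only meaningful when read together with the mean.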

Signatures or heuristics?

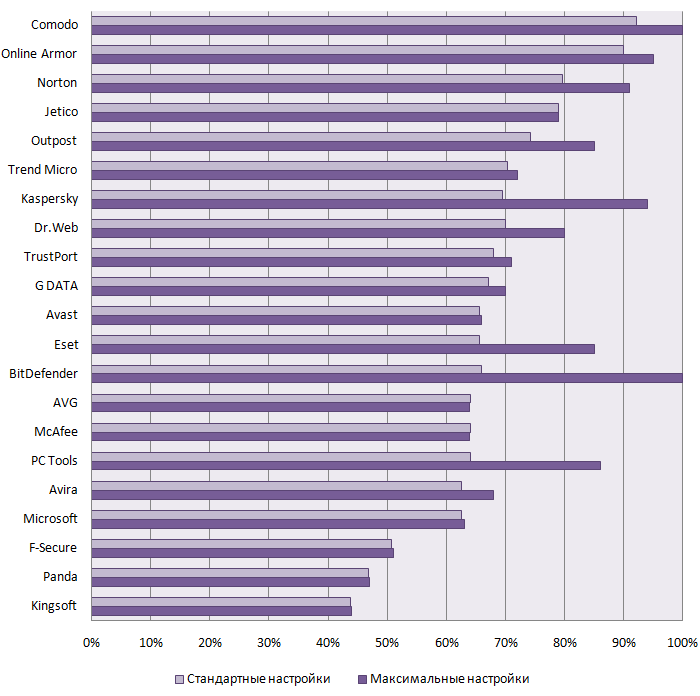

As an addendum to the test, a final measurement of the detection level for the malware collection was performed on the updated antivirus software a week after the main test, on 8 December 2007. This measured the effectiveness of each program's classical signature-based method in addition to that of its heuristic analyzer, and showed what role each component plays in the program's overall detection level (see diagram 3).

Diagram 3: Effects of different antivirus protection components on the overall level of malware detection

The diagonal line in diagram 3 denotes a 100% detection level for new malicious programs. A product can get close to this line through strong performance from either component of antivirus protection, or from a combination of the two.
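Table 3 splits each product's total into two parts: the percentage detected before the update (heuristics with frozen databases) and the percentage added after the update (signatures). These two parts add up to the total. Assuming diagram 3 plots these two shares against each other, the diagonal can be written as follows. Here $h$ (heuristic share) and $s$ (additional signature share) are symbols introduced for illustration, not notation from the test.

$$
T = h + s, \qquad \text{diagonal line: } h + s = 100
$$

$$
\text{Kaspersky Anti-Virus: } T = 45.4 + 52.2 = 97.6
$$

Under this reading, every point on a line parallel to the diagonal has the same total $T$. A product can therefore approach the 100% line by raising either $h$ or $s$.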

Products in the dark orange (80-100%) and light orange (60-80%) zones demonstrated excellent and good detection levels, respectively, for new viruses (aged from 1 to 5 weeks, see methodology).

Of these, Avira AntiVir Personal Edition Premium, Dr.Web, BitDefender Antivirus, ESET NOD32 Anti-Virus and Sophos Anti-Virus reached that level mainly through the contribution of their proactive component.

Kaspersky Anti-Virus and F-Secure Anti-Virus achieved similar results mainly through their signature component.

The most balanced products in this respect were AVG Anti-Virus Professional Edition, VBA32 Antivirus, Symantec Anti-Virus and McAfee VirusScan Plus, in which both components performed with comparable effectiveness. These products ended up in the lower left-hand square of diagram 3, yet their overall detection level for new malware was still good or excellent.

Panda Antivirus achieved a satisfactory overall detection level for new malicious programs (58.1%). By contrast, Trend Micro Antivirus plus Antispyware (30.4%) and Agnitum Outpost Security Suite (13.5%) proved almost entirely ineffective against new threats.

Table 3: Quality of new virus detection

| Antivirus product | Detected before update, 4 weeks (%) | Additionally detected after update (%) | Total detected (%) |

| Avira | 71.1% | 21.5% | 92.7% |

| BitDefender | 62.8% | 23.1% | 85.9% |

| Eset | 58.5% | 18.1% | 76.6% |

| DrWeb | 57.2% | 23.8% | 81% |

| Sophos | 55.7% | 12.6% | 68.3% |

| Avast | 51.6% | 24.6% | 76.2% |

| VBA | 48.1% | 31% | 79.1% |

| Kaspersky | 45.4% | 52.2% | 97.6% |

| McAfee | 43.2% | 20% | 63.2% |

| Symantec | 38.4% | 26.2% | 64.6% |

| AVG | 37.1% | 49.2% | 86.3% |

| F-Secure | 35.9% | 62.2% | 98.2% |

| Trend Micro | 30.2% | 0.3% | 30.4% |

| Panda Security | 24.5% | 33.6% | 58.1% |

| Agnitum (VirusBuster) | 12.2% | 1.3% | 13.5% |

Diagram 4: Quality of new virus detection - contribution of different components

F-Secure Anti-Virus (98.2%), Kaspersky Anti-Virus (97.6%), Avira AntiVir Personal Edition Premium (92.7%), AVG Anti-Virus Professional Edition (86.3%) and BitDefender Antivirus (85.9%) proved the best at detecting new malicious programs.
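This ranking can be cross-checked against table 3. The sketch below does three things: it confirms that the first two columns add up to the total within rounding, assigns each product to the zones described for diagram 3 (80-100% and 60-80%), and sorts the products by total. The product keys mirror the table labels; the helper names and the 0.15 rounding tolerance are illustrative assumptions.

```python
# Table 3 (%): (detected before update, additionally detected after update, total).
TABLE3 = {
    "Avira": (71.1, 21.5, 92.7),
    "BitDefender": (62.8, 23.1, 85.9),
    "Eset": (58.5, 18.1, 76.6),
    "DrWeb": (57.2, 23.8, 81.0),
    "Sophos": (55.7, 12.6, 68.3),
    "Avast": (51.6, 24.6, 76.2),
    "VBA": (48.1, 31.0, 79.1),
    "Kaspersky": (45.4, 52.2, 97.6),
    "McAfee": (43.2, 20.0, 63.2),
    "Symantec": (38.4, 26.2, 64.6),
    "AVG": (37.1, 49.2, 86.3),
    "F-Secure": (35.9, 62.2, 98.2),
    "Trend Micro": (30.2, 0.3, 30.4),
    "Panda Security": (24.5, 33.6, 58.1),
    "Agnitum (VirusBuster)": (12.2, 1.3, 13.5),
}


def consistent(before: float, after: float, total: float, tol: float = 0.15) -> bool:
    """True if before + after equals the reported total up to rounding error."""
    return abs(before + after - total) <= tol


def zone(total: float) -> str:
    """Zones described for diagram 3: 80-100% excellent, 60-80% good."""
    if total >= 80:
        return "dark orange (excellent)"
    if total >= 60:
        return "light orange (good)"
    return "below 60%"


def rank_by_total(table: dict) -> list:
    """Product names sorted by total detection, best first."""
    return sorted(table, key=lambda product: table[product][2], reverse=True)


if __name__ == "__main__":
    for product in rank_by_total(TABLE3):
        before, after, total = TABLE3[product]
        note = "" if consistent(before, after, total) else "  (columns do not add up)"
        print(f"{product:<22} {total:5.1f}%  {zone(total)}{note}")
```

The five highest totals are F-Secure, Kaspersky, Avira, AVG and BitDefender. The 0.1-point mismatches for Avira, F-Secure and Trend Micro presumably come from each column being rounded separately.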
